A few months ago, I met someone whose story stayed with me. A friend of my cousin had recently received a cancer diagnosis. Amid the whirlwind of medical appointments, treatment plans, and the emotional weight of it all, she found an unexpected source of support: an AI chatbot. She told me that being able to talk about her fears, her questions, and her struggles with an AI helped her process what she was going through in ways that felt both immediate and judgment-free. The AI gave her a space to articulate thoughts she wasn’t yet ready to share with others, to ask questions she felt too vulnerable to voice aloud, and to feel heard in moments of overwhelming uncertainty. Her experience isn’t unique. More people are turning to AI not just for information or productivity, but for emotional support. And while this trend raises important questions about the role of technology in our most human moments, it also reveals something profound about the gaps AI is filling in our lives and the careful balance we must strike between embracing its benefits and maintaining our capacity to think and choose for ourselves.

The phenomenon of AI companionship

We’re living through a quiet revolution in how people seek emotional support. Whether it’s processing a difficult diagnosis, working through relationship challenges, managing anxiety, or simply navigating the everyday stresses of modern life, AI has become an unexpected confidant for millions. This is happening now, in conversations that unfold on smartphones and computers around the world. But why? What is it about AI that makes people feel comfortable sharing their deepest concerns with lines of code? The answer lies in understanding the structural gaps in how we currently provide emotional and psychological support.

The gaps AI fills

AI offers something that traditional support systems often struggle to provide: constant availability. Mental health crises don’t operate on business hours, and neither do the moments when we most need to process our emotions. AI is there at 3 AM when anxiety keeps you awake, during a lunch break when you are processing difficult news, or in the quiet moments when everyone else in your life seems too busy.

This democratisation of emotional support is particularly significant. Professional therapy remains financially out of reach for many people. Even in countries with robust healthcare systems, waiting lists for mental health services can stretch for months. AI doesn’t replace these services; it cannot and should not. But it can provide a form of support in the interim, a bridge when professional help isn’t immediately accessible.

There is also the reality of social exhaustion. Our friends and family care about us, but they have their own challenges and limited bandwidth. Sometimes we hesitate to burden them with our problems, especially if we’ve already leaned on them recently. AI offers support without the social calculus of whether we’re asking too much or imposing on someone’s time.

Perhaps most importantly, AI creates what might be called a ‘judgment-free zone’. The stigma surrounding mental health has eased, but it hasn’t disappeared. People still struggle to discuss depression, anxiety, addiction, trauma, or even everyday failures without fear of judgment. With AI, there’s no social risk. You won’t see disappointment in its response. It won’t gossip about your struggles or change how it views you. For someone working through shame or stigma, this psychological safety can be transformative.

My own experience with AI support reinforced something I had suspected: sometimes the act of articulating our problems is itself therapeutic. When difficult things happen in our lives, we can find value not just in AI’s responses, but in the process of organising our thoughts well enough to explain them. This is what therapists call ‘narrative coherence’, the ability to construct a story about what we’re experiencing that makes sense to us. AI serves as a thinking partner in this process. By asking clarifying questions, reflecting back what we’ve said, or suggesting alternative perspectives, it can help us see our situations from different angles. It’s not unlike the ancient practice of talking to ourselves, but with the benefit of an interactive response that can help us dig deeper. This interaction helps bridge the gap between our internal thoughts and the external world, occupying a space that is outside us yet unusually close to our own minds.

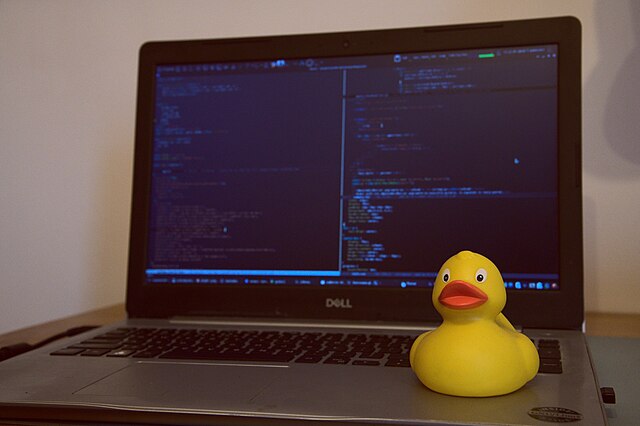

There’s also what programmers call ‘rubber duck debugging’, explaining a problem aloud to an inanimate object (traditionally a rubber duck on one’s desk), which often reveals the solution. AI takes this concept further, actively engaging with what we share and helping us identify patterns or contradictions in our thinking that we might have missed.

For some people, AI serves a rehearsal function. Before having a difficult conversation with a partner, family member, or colleague, they can practise with AI: test different approaches, work through their emotions, and build confidence. Before disclosing a diagnosis to loved ones, they can explore how to frame it. This preparatory work can make the eventual human conversation more productive and less overwhelming.

This might all sound very modern, but in reality, humans have always sought ways to externalise and process their emotions. We’ve prayed to gods and saints, confessed to priests, kept private journals, talked to our pets, and spent long hours in solitary contemplation. The need to articulate our inner lives, to feel heard, and to make sense of our experiences is as old as consciousness itself. AI is simply the latest tool in this ancient human practice. Just as writing allowed us to externalise our thoughts in permanent form, and telephones allowed us to connect across distances, AI allows us to engage in a kind of dialogue that was previously impossible, one that’s infinitely patient, instantly available, and capable of drawing on vast repositories of human knowledge and experience.

The innovation isn’t in the need, which has always existed. It’s in the accessibility and form of the response. Where journaling requires us to generate insights entirely on our own, and where human confidants bring their own experiences and biases, AI occupies a middle space: responsive but not judgmental, informed but not directive, available but not intrusive.

Here’s where we must be clear-eyed and careful. The value of AI companionship depends entirely on how we use it. This is true of every powerful tool humans have created. A knife can prepare a meal or cause harm. Medication can heal or poison, depending on the dose and application. The tool itself is neutral; its impact depends on the wisdom and discernment of the person wielding it.

No one would swallow an entire box of pills on the grounds that medication can be helpful; that would be dangerous. Similarly, asking AI to guide major life decisions, provide medical diagnoses, or substitute for professional mental health care when that’s genuinely needed crosses important boundaries. The fact that some people might misuse AI (following its suggestions blindly, becoming dependent on it for decisions they should make themselves, or avoiding human connection altogether) does not negate its value for those who use it responsibly.

This is a crucial distinction that often gets lost in discussions about AI and mental health. Just because there will be cases of misuse doesn’t mean we should deny everyone access to the benefits. Instead, we need to build AI literacy and help people understand both what AI can offer and where its limitations lie.

So what does responsible use actually look like in practice?

First, it means recognising that AI does not genuinely care about you, even when it seems empathetic. Its responses are generated from patterns in data, not from genuine emotion or concern. This doesn’t make the support less valuable, but it does mean we shouldn’t mistake algorithmic empathy for human connection.

Second, it means understanding when to escalate. AI can help you process feelings of sadness, but if those feelings persist and interfere with your daily functioning, that’s when human professional help becomes necessary. AI can provide information about medical conditions, but it cannot diagnose or treat you. It can help you think through relationship problems, but it cannot replace the complex work of couples therapy with a trained professional.

Third, it means cross-checking important information. If AI suggests a coping strategy, research it. If it offers factual information that will inform your decisions, verify it through reliable sources. Treat AI as one input among many, not as an oracle.

Fourth, it means maintaining human connections alongside AI interactions. Using AI for support should supplement, not replace, your relationships with friends, family, and community. If you find yourself consistently choosing AI over human connection, that’s a signal to pause and reflect.

Finally, it means being mindful of privacy. While AI interactions can feel ephemeral and confidential, the data you share may be stored and used in ways you don’t fully understand. Be thoughtful about what you disclose, especially information about other people.

It’s worth acknowledging that comfort with AI companionship varies significantly across cultures and generations. Younger people who grew up with digital technology may find AI support entirely natural, while others might view it as dystopian or concerning. These different perspectives are valid and reflect broader questions about how technology is reshaping human connection.

We are living through a loneliness epidemic. Social isolation has been called a public health crisis, with impacts on physical and mental health comparable to smoking or obesity. Traditional community structures like extended families, religious congregations, and neighbourhood bonds have weakened in many societies. Work has become more isolating for many, with remote work and gig economies replacing office camaraderie.

In this context, AI companionship is not creating isolation but responding to isolation that already exists. Whether it helps or hinders depends on how we integrate it into our lives. Used wisely, it can be a bridge back to human connection, helping people process their emotions and build the confidence to reach out to others. Used unwisely, it could become a substitute that deepens isolation.

The rise of AI companionship tells us something important about contemporary life. It reveals the gaps in our support systems, the persistent stigma around vulnerability, and the structural barriers to accessing care. It shows us how many people are struggling in silence, seeking understanding wherever they can find it. But it also reveals something hopeful: the human capacity to adapt, to find new ways of meeting ancient needs, and to extend compassion, even to ourselves, through the medium of technology. The person who turns to AI in a moment of crisis is not abandoning their humanity; they are being resourceful about seeking support in whatever form is available to them.

As AI capabilities continue to progress, these questions will only become more pressing. We’ll need to think carefully about how to integrate AI support into existing mental health ecosystems, how to train people in AI literacy, and how to ensure that vulnerable populations aren’t exploited or harmed.

But we shouldn’t let perfect be the enemy of good. Right now, today, AI is helping people process difficult emotions, feel less alone, and build the courage to seek human help when they need it. For someone receiving a devastating diagnosis, facing a crisis, or simply struggling through a difficult season of life, having an AI to talk to might be precisely what they need in that moment.

The goal isn’t to replace human connection, empathy, or professional care. It’s to recognise that AI can play a valuable supplementary role, one more tool in our collective toolkit for supporting each other’s wellbeing and mental health.

As we explore this new area, we need to keep two important ideas in mind at the same time: AI companionship offers genuine value and deserves to be taken seriously, and it requires us to be thoughtful, discerning users who understand both its possibilities and its limits. The technology is here. The question is whether we can develop the wisdom to use it well, to let it enhance our humanity rather than replace it, to see it as a bridge to human connection rather than a substitute for it.

In the end, this is what humAInism is about: finding the intersection where technology serves human flourishing, where we remain the authors of our own stories, and where even in our most vulnerable moments, we remain free to choose how we seek support, process our experiences, and ultimately, how we heal.

Author: Slobodan Kovrlija