Artificial intelligence offers extraordinary power to create, decide, and optimise, and yet its most significant challenge is moral, not technical. The tension between what we can do and what we should do is as old as civilisation itself, from the Garden of Eden to the age of algorithms. As AI begins to touch every domain of human life, the task before us is not only to regulate it, but to cultivate wisdom equal to its power.

The ancient question reborn

Every leap in human capability raises an older, quieter question: How far should we go with the power we acquire? Artificial intelligence now brings that timeless dilemma into focus with unprecedented clarity. When algorithms can write novels, compose symphonies, or diagnose illness more accurately than a doctor, we marvel at what is possible. Yet the deeper unease remains: are we building tools that serve human flourishing, or systems that slowly redefine what it means to be human? The conversation about AI’s creative reach or its impact on labour is, at its heart, a moral conversation. The technology itself has no intention or conscience. It simply magnifies our own. The question is not what AI will become, but what we will become through it.

From Eden to algorithms

The earliest moral stories warned against knowledge untempered by wisdom. In the Garden of Eden, the fruit from the Tree of Knowledge offered understanding at the cost of innocence. Prometheus brought fire to humanity and suffered for overreaching. The builders of Babel dreamed of touching the heavens and lost their unity of speech.

These stories endure not because they oppose discovery, but because they reveal its cost when divorced from responsibility. Every age has replayed the same pattern: capability races ahead of conscience. Fire, steam, electricity, the atom: each transformed civilisation before moral reflection caught up. AI continues that sequence. We are no longer merely inventing tools to extend our reach; we are creating systems that may one day act, decide, and imagine in our stead. The moral challenge is not the pace of progress, but whether our ethical reasoning can keep step with it.

Generative models can now produce art, music, and writing at an extraordinary scale. But they do not ask why. They generate without curiosity, without struggle, without the spark of lived experience. The same applies to workplace automation. Machines optimise; they do not care. In earlier reflections on human dignity and work, I argued that efficiency alone can erode meaning. A society that measures worth only by productivity risks mistaking motion for purpose and output for value.

If technology can perform both creative and physical labour, what remains distinctly human is not the task itself, but the intent behind it. The danger is not that AI will destroy meaning, but that it will tempt us to forget why meaning matters. A civilisation guided only by capability risks producing abundance without direction, a future busy but hollow.

Societies have always tried to turn moral instincts into systems of control, such as laws, norms, and codes of conduct. Such structures are essential, yet they function like fences: they set boundaries but cannot cultivate virtue. Today’s debates about AI governance (data protection, content transparency, liability) are vital steps, but they address behaviour, not belief. A company may follow every rule (like the GDPR) and still build products that erode trust, displace workers, or manipulate emotion. Regulation can restrain excess; it cannot replace wisdom. Moral progress has never come from law alone. It depends on conscience, the inner measure that asks not ‘Is this allowed?’ but ‘Is this right?’ That question is difficult precisely because no algorithm can answer it for us.

We sometimes speak of ‘ethical AI’, but ethics is not a property of code. Algorithms can simulate empathy, but they cannot feel the moral gravity of a decision. The responsibility lies with those who design, deploy, and depend on them. Every decision to automate, imitate, or accelerate carries a moral weight. Choosing to replace human labour with machines, to mimic human faces for digital performance, or to delegate judgment to algorithms may all be efficient. Still, each choice reshapes what it means to act responsibly.

The myth of Eden was never about forbidden knowledge; it was about the temptation to act without accountability. AI tempts us in a similar way: to wield immense capability while distancing ourselves from its consequences. If we are not careful, we risk building a civilisation of plausible deniability, where harm is no one’s fault because it was everyone’s code.

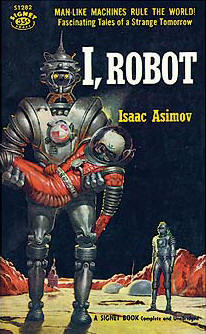

If technology reshapes what we can do, moral education must reshape how we decide. Ethics cannot be outsourced to compliance departments or left to after-the-fact regulation. It must be woven into the design of systems, institutions, and individuals. In science fiction, Isaac Asimov imagined this challenge in his famous Three Laws of Robotics, which encode the protection of humans, obedience to their commands, and self-preservation into artificial agents. These stories show that even imagined machines require ethical guardrails, an insight that applies directly to real-world AI. Just as Asimov’s laws guide robots, our challenge is to ensure that the designers and users of AI carry an equally robust moral compass.

Universities that train data scientists should also train moral thinkers. Companies building AI tools should conduct dignity assessments alongside safety tests, asking how each innovation affects human agency and wellbeing. Policymakers should ensure transparency not only in data but also in intention: why a system exists, whom it serves, and what values it encodes. Most of all, societies must preserve areas of life that remain deliberately, consciously human: care, judgment, creativity, empathy. These are not inefficiencies to be optimised away but sources of meaning that sustain civilisation itself.

Progress requires imagination balanced by humility. The aim is not to slow discovery, but to guide it. A humane future will depend on designing systems that expand freedom, not dependency; that value dignity over mere output; that measure success not only in what machines achieve, but in what people become through them.

Our creations now mirror us with uncanny precision. The question is whether we can still recognise ourselves in what we make. The true measure of advancement will not be how lifelike AI becomes, but how alive our moral sense remains.

The line between what we can and what we should do has defined civilisation from its beginning. It is redrawn in every era, not by algorithms but by conscience. AI grants us unprecedented power to create, to decide, to transform. But the story of progress has always hinged on restraint, on knowing when to stop and on remembering that knowledge without wisdom endangers what it seeks to serve. We stand again before a tree heavy with fruit, surrounded by voices urging us to taste. The choice, as ever, is ours: to pursue what is possible, or to remember what is right.

Author: Slobodan Kovrlija