Now that you understand the origins of AI and the fundamental concepts behind AI, from neural networks to parameters, tokens, and context windows, let’s explore how these technologies are applied in the real world. This is where theory meets practice, where the AI systems you interact with daily become more than just impressive demos but practical tools that can genuinely enhance your work and life. We’ll go over the mechanisms that determine whether an AI can help you with current events, understand your uploaded images, or take meaningful actions on your behalf. Understanding these applications will help you choose the right AI tools for your needs and use them more effectively.

Fine-tuning: Customising pre-built AI

Imagine you’ve bought a high-quality car, but you want to optimise it for your specific driving conditions. Consider adding snow tyres for winter driving or adjusting the engine for improved fuel efficiency on your daily commute. Fine-tuning works similarly with AI models.

Fine-tuning takes a pre-trained AI model, one that already understands language and general knowledge, and gives it additional, specialised training on specific types of data or tasks. Rather than building an AI system from scratch (which would require enormous resources), organisations can take a powerful existing model and teach it to excel in their particular domain. This approach is central to discussions around building national AI capacity, as it allows countries to adapt global models for local needs.

How fine-tuning works in practice:

A hospital might fine-tune an AI model using medical literature and patient records (while protecting patient privacy) to create an assistant that can provide doctors with diagnostic suggestions. A legal firm might fine-tune a model on legal documents to help lawyers draft contracts more efficiently. A customer service company might fine-tune an AI on their specific products and policies to create more accurate support chatbots.

The beauty of fine-tuning is efficiency. Instead of the months and massive computational resources required to train a model from scratch, fine-tuning can be completed in days or weeks with much more modest hardware requirements. The base model provides the fundamental language understanding, while fine-tuning adds the specialised knowledge and behaviour patterns needed for specific applications.

However, fine-tuning requires careful consideration. The quality of specialised training data directly affects the results, and there’s always a risk that the model will become too narrow in its focus, losing some of its general capabilities. Additionally, fine-tuned models need ongoing maintenance as domain knowledge evolves.

Prompt Engineering: The art of talking to AI

Just as knowing how to ask good questions makes you a better researcher or interviewer, learning to communicate effectively with AI, called prompt engineering, can dramatically improve your results. This isn’t about learning a programming language; it’s about understanding how to structure your requests for maximum clarity and effectiveness.

Think of prompt engineering as the difference between asking a librarian, “I need a book” versus “I’m looking for recent books about sustainable urban planning, particularly focusing on transportation solutions, written for city planners rather than academic researchers.” The second request gives the librarian much more useful context to help you find exactly what you need.

Key principles of practical prompt engineering:

Being specific and clear about what you want helps AI models generate more useful responses. Instead of prompting with “Write about climate change,” you might prompt with “Write a 500-word explanation of how electric vehicles reduce carbon emissions, aimed at someone considering their first EV purchase.” This specificity helps the AI understand both the content focus and the appropriate tone and depth.

Providing context and examples can often significantly improve results. If you want the AI to adopt a particular writing style, show it an example of that style. If you need it to follow a specific format, provide a template. If you’re working on a complex problem, break it down into steps and ask the AI to work through them systematically.

Using role-playing can be surprisingly effective. Asking an AI to “respond as an experienced teacher explaining this to high school students” or “analyse this as a financial advisor would” can help focus the response on the appropriate level and perspective for your needs.

Standard prompt engineering techniques:

Chain-of-thought prompting involves asking the AI to “think step by step” or “show your reasoning,” which often leads to more accurate and reliable answers, especially for complex problems.

Few-shot prompting provides several examples of the input-output pattern you want, helping the AI understand the task better than an explanation alone.

The iterative approach treats prompting as a conversation rather than a single request. Start with a basic prompt, evaluate the response, then refine your request based on what worked and what didn’t. This collaborative refinement often produces much better results than expecting perfection on the first try.

RAG: Giving AI access to current information

One limitation we discussed earlier is that AI models only know information from their training data, which has a cutoff date. Retrieval-Augmented Generation (RAG) solves this problem by combining AI’s language generation capabilities with real-time access to current information sources.

RAG works by first retrieving relevant information from external sources such as databases, websites, documents, or other knowledge repositories and then using that retrieved information to generate informed responses. It’s like having a research assistant who can quickly find the most current information on a topic and then help you understand and work with that information. This matters for countering disinformation, because responses can be grounded in up-to-date sources that a reader can check, provided those sources are themselves reliable.

When you ask a RAG-enabled system about recent events, current stock prices, or the latest research in a field, it first searches relevant sources for up-to-date information, then crafts a response based on what it finds. This approach dramatically improves factual accuracy for current topics and allows AI systems to work with proprietary or specialised information that wasn’t in their original training data.
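The retrieve-then-generate flow described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the three-sentence `DOCUMENTS` list stands in for a real knowledge base, word overlap stands in for a real search index, and the final step only builds the prompt that would be handed to a language model rather than calling one.

```python
import re

# Hypothetical in-memory knowledge base; a real system would query
# a database, document store, or web search instead.
DOCUMENTS = [
    "The returns policy allows refunds within 30 days of purchase.",
    "Standard shipping takes three to five business days.",
    "Support is available by email from Monday to Friday.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its words as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Step 1: rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Step 2: put the retrieved passages in front of the question."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return f"Answer using only these sources:\n{context}\n\nQuestion: {question}"


question = "How many days does shipping take?"
passages = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, passages)
print(prompt)
```

In a production system the overlap score would typically be replaced by a proper search or embedding-based index, but the two-step shape (find relevant passages, then generate from them) stays the same.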

Many practical AI applications use RAG behind the scenes. Customer support chatbots retrieve information from current product manuals and FAQ databases before responding to queries. Research assistants access scientific databases to provide information about recent studies. Business intelligence tools pull from current market data to generate reports and insights.

Perplexity AI combines powerful language models with real-time web search, allowing it to provide current information while citing sources. Many enterprise AI systems use RAG to access company-specific documents, policies, and databases, creating AI assistants that can answer questions about internal processes and current business data.

ChatGPT’s web browsing feature and various plugin capabilities apply the same retrieve-then-generate idea, allowing the model to look up current information before generating responses. The key advantage is that responses tend to be more reliable and up-to-date while maintaining the natural language capabilities that make AI interactions so useful.

While RAG significantly improves factual accuracy, the quality of responses depends heavily on the quality and reliability of the retrieved information. RAG systems are only as good as their information sources, which means choosing trustworthy, well-maintained databases and websites is crucial for reliable results.

Until recently, most AI systems specialised in a single type of input: text, images, or audio. Multimodal AI breaks down these barriers, creating systems that can understand and work with multiple types of information simultaneously, much like humans naturally do when we read, look at pictures, and listen to sounds as part of understanding the world around us. A multimodal AI system can analyse a photograph you upload, listen to your spoken question about it, and generate a comprehensive written or spoken response. This integration of different information types enables much more natural and useful interactions than single-mode systems.

In healthcare, a multimodal AI system might analyse medical images, such as X-rays or MRIs, while simultaneously considering the patient’s written medical history and current symptoms described in text, providing doctors with more comprehensive diagnostic assistance.

For education, these systems can help students by analysing diagrams or charts in textbooks, listening to spoken questions about the material, and providing explanations that combine visual, audio, and textual elements tailored to the student’s learning style.

In accessibility, multimodal AI can describe visual content for visually impaired users, transcribe audio for individuals with hearing impairments, or convert between different modes of communication based on individual needs and preferences.

Modern multimodal systems, such as GPT-4 Vision, can analyse images and answer questions about them, describe visual content, read text within images, and even assist with visual problem-solving, like interpreting charts or diagrams. Google’s Gemini can work with text, images, and audio, while Microsoft’s Copilot integrates these capabilities across different Microsoft applications. These systems open up new possibilities for creative collaboration, technical problem-solving, and accessible communication that were previously impossible when AI could only work with one type of input at a time.

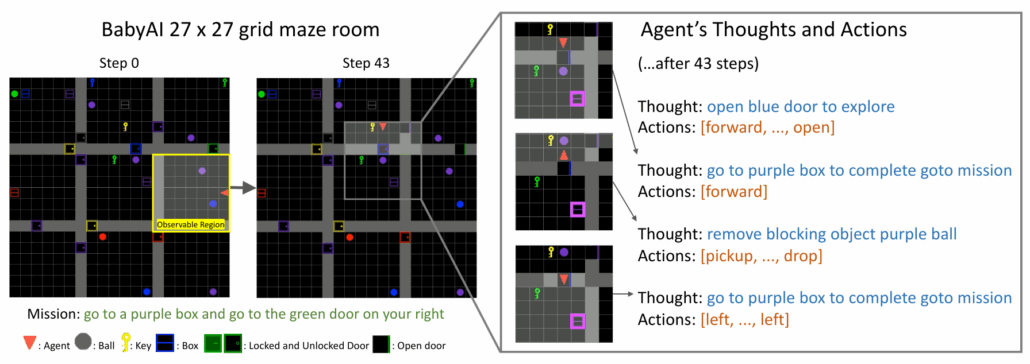

Traditional AI systems are reactive, which means they respond to your questions or requests but don’t take independent action. Agentic AI represents a significant evolution: these are AI systems that can plan, make decisions, and take actions to accomplish goals, often working autonomously over extended periods. Think of the difference between a knowledgeable consultant who can answer your questions versus a capable assistant who can actually implement solutions, monitor progress, and adapt their approach based on results. Agentic AI systems can break down complex tasks into steps, execute those steps using various tools and resources, and adjust their strategy based on outcomes.

These systems typically combine several capabilities: they can plan multi-step approaches to complex problems, use various tools and APIs to gather information or take actions, monitor their progress and adapt when things don’t go as expected, and often work toward long-term goals rather than just responding to immediate requests.

For example, an agentic AI system tasked with planning a business trip might research flight options, check hotel availability, coordinate with calendar systems, book reservations, send confirmations to relevant people, and even monitor for changes that might require rebooking, all while keeping you informed of its progress.
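The plan, act, observe, and adapt cycle behind an example like this can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any particular product works: the three tool functions are hypothetical stand-ins for real booking services, the plan is hard-coded rather than produced by a language model, and the "preferred hotel is full" failure is simulated so the adaptation step has something to react to.

```python
# Hypothetical tools standing in for real flight and hotel booking services.
def search_flights(city: str) -> str:
    return f"flight to {city} found"


def book_hotel(city: str) -> str:
    # Simulated failure so the agent has something to adapt to.
    if city == "Geneva":
        raise RuntimeError("preferred hotel is full")
    return f"hotel in {city} booked"


def book_backup_hotel(city: str) -> str:
    return f"backup hotel in {city} booked"


TOOLS = {
    "search_flights": search_flights,
    "book_hotel": book_hotel,
    "book_backup_hotel": book_backup_hotel,
}


def plan_trip(city: str) -> list[tuple[str, str]]:
    """Plan: break the goal into tool calls (fixed here; a real agent would ask a model)."""
    return [("search_flights", city), ("book_hotel", city)]


def run_agent(city: str) -> list[str]:
    """Act on each step, record the outcome, and adapt the plan when a step fails."""
    steps = plan_trip(city)
    log = []
    while steps:
        tool_name, argument = steps.pop(0)
        try:
            log.append(TOOLS[tool_name](argument))
        except RuntimeError as error:
            log.append(f"{tool_name} failed: {error}")
            if tool_name == "book_hotel":
                # Adapt: queue a fallback step instead of giving up.
                steps.insert(0, ("book_backup_hotel", argument))
    return log


for entry in run_agent("Geneva"):
    print(entry)
```

The returned log doubles as the progress report mentioned above: every action and every failure is recorded, so a person can review what the system did on their behalf.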

In business automation, agentic AI can manage workflows that span multiple applications and systems, handling tasks such as processing invoices, updating customer records, and generating reports without requiring human intervention for routine cases.

In research and analysis, these systems can gather information from multiple sources, synthesise findings, identify patterns or gaps, and even suggest follow-up research directions, working more like a research partner than a simple question-answering tool.

Agentic AI represents both tremendous potential and important responsibilities. These systems can accomplish much more than traditional AI, but they also require careful oversight, clear boundaries, and robust safety measures since they’re designed to take action rather than just provide information. This is particularly critical in global affairs, where the rise of autonomous systems requires new forms of AI diplomacy and governance.

The development of reliable, trustworthy agentic AI is crucial for realising the technology’s benefits while maintaining human control over important decisions and outcomes.

Understanding where AI processing happens affects everything from response speed to privacy to cost. This distinction between cloud-based APIs and on-device processing represents different approaches to delivering AI capabilities, each with important trade-offs.

When you use ChatGPT, Claude, or Google’s Gemini, your text is sent over the internet to powerful servers in data centres, where the AI processing happens, and the results are sent back to your device. This approach leverages massive computational resources that would be impossible to fit in a phone or laptop.

API-based systems offer access to the most powerful AI models with the latest capabilities and updates. They can handle complex tasks that require enormous computational power, and users don’t need expensive hardware since the heavy lifting happens in the cloud. Updates and improvements are deployed centrally, so users automatically get access to enhanced capabilities.

However, this approach requires internet connectivity for AI functionality, raises privacy considerations since data travels to external servers, and often involves usage-based costs. Response times depend on internet speed and server load, and users have less control over data handling and processing.

Increasingly, AI capabilities are being built into devices themselves. Your smartphone, laptop, or tablet processes AI tasks locally without sending data to external servers. Apple’s Siri improvements, Google’s on-device transcription, and various mobile AI features represent this approach.

On-device processing offers immediate privacy benefits since data doesn’t leave your device, works without internet connectivity, provides faster response times for many tasks, and avoids ongoing usage costs once you own the device. Users keep more direct control over their data and AI interactions.

The trade-offs include more limited AI capabilities compared to cloud-based systems, higher device costs for AI-capable hardware, and slower updates since improvements require hardware upgrades or major software updates rather than seamless cloud deployments.

Many modern AI applications use hybrid approaches, handling simple tasks on-device for speed and privacy while using cloud-based processing for complex tasks that require more computational power. This combination aims to provide the best of both approaches while minimising the disadvantages of each.

For example, a voice assistant might process wake words and simple commands on-device but send complex queries to cloud-based systems. A photo editing app might handle basic AI features locally but use cloud processing for advanced features that require more computational power.
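A hybrid setup of this kind comes down to a routing decision for each request. The sketch below is a simplified assumption of how such a rule might look: the set of on-device commands, the `route` function, and the `online` flag are all invented for illustration, and real assistants use far richer signals than an exact-match lookup.

```python
# Hypothetical set of simple commands the device can handle by itself.
ON_DEVICE_COMMANDS = {"set a timer", "turn on the lights", "pause music"}


def route(request: str, online: bool = True) -> str:
    """Decide where a request should be processed."""
    normalized = request.strip().lower()
    if normalized in ON_DEVICE_COMMANDS:
        # Simple and private: handle locally, even without a connection.
        return "on-device"
    if online:
        # Complex query: send to more powerful cloud models.
        return "cloud"
    # Complex query with no connection: nothing can handle it right now.
    return "unavailable"


print(route("Pause music"))
print(route("Summarise this 40-page report"))
print(route("Summarise this 40-page report", online=False))
```

The third case shows the trade-off in practice: local features keep working offline, while anything that depends on the cloud does not.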

These practical applications work together to create the rich AI ecosystem you encounter daily. Fine-tuning creates specialised AI tools for different industries and use cases. Prompt engineering helps you communicate effectively with any AI system. RAG keeps AI responses current and accurate. Multimodal capabilities enable more natural interactions across different types of content. Agentic AI handles complex, multi-step tasks. The choice between API and on-device processing affects privacy, speed, and functionality.

Understanding these applications helps you make informed decisions about which AI tools to use for different purposes, how to interact with them effectively, and what to expect in terms of capabilities and limitations. As these technologies continue to evolve and integrate, this practical knowledge will help you navigate an increasingly AI-enhanced world while maintaining realistic expectations and making choices that align with your needs and values. The future of AI lies not just in more powerful models, but in more thoughtful, human-centred applications of these capabilities. By understanding how AI gets applied in practice, you’re better equipped to be an informed user and advocate for AI development that truly serves human needs and values.

This completes our foundational series on understanding AI. Whether you stopped after learning the basics or dove deep into practical applications, you now have the knowledge to engage confidently with AI tools while understanding both their remarkable capabilities and important limitations.