This dilemma may seem paradoxical, as AI has been reducing ‘lost in translation’ through platforms such as Google Translate and DeepL. We can now read texts and watch videos in foreign languages, and AI translation platforms are becoming part of our daily routines. On a deeper level, however, AI may increase ‘lost in translation’, as it can ignore cultural differences and nuances in intercultural communication. This question is becoming critical as ChatGPT enters mainstream daily use worldwide. The risk of AI-accelerated ‘lost in translation’ was clearly illustrated by MIT’s Moral Machine project. In 2017, a group of researchers asked close to 2.3 million people from 233 countries and territories to decide whom to spare in a self-driving car accident, using binary dilemmas such as the following.

Binary dilemmas: whom to spare in a self-driving car accident?

- humans vs pets
- passengers vs pedestrians
- men vs women
- young vs elderly
- pedestrians crossing legally vs jaywalkers
- fit vs less fit people
- higher vs lower social status

Graphic: an example of a choice dilemma

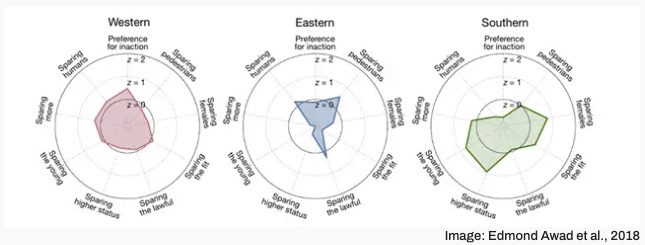

In the final analysis, the researchers focused on the 117 countries with more than 100 survey participants each.

The answers clustered into three cultural groups: the Western group, consisting of the USA, Europe, and African nations such as South Africa and Kenya; the Eastern group, which includes China, Japan, South Korea, South Asia, and the Middle East; and the Southern group, consisting mainly of Latin American countries.

When asked whether to spare younger or older people in a car accident, the Western group showed a preference for younger people (country ranks: Canada – 29, UK – 34, South Africa – 38), while the Eastern group shifted towards older people (India – 99, Japan – 2013, South Korea – 110, China – 115).

However, the cultural groups were not internally consistent. For example, France and Germany, although both are Western European countries, differed significantly in their preference for sparing women (France – 2, Germany – 74). Similarly, Chinese respondents showed a much stronger preference for sparing law-abiding people than respondents in other Asian countries.

While the results of the Moral Machine study drew criticism and controversy, there is little doubt that cultural differences will be a major challenge in deploying platforms such as ChatGPT worldwide.

ChatGPT is trained mainly on data from Western sources, ranging from philosophy books and literature to websites. The values and ways of thinking embedded in these sources shape the AI model and the answers it generates.

When deployed in different cultural contexts, AI platforms can clash with local cultural and ethical norms. They can ignore these norms or, at worst, amplify intercultural biases.

This can lead to cultural misinterpretations and discriminatory outcomes.

To reduce these risks, three steps are needed. First, data sets for AI development should represent the world’s cultures and languages.

Second, oral cultures, especially those from Africa, should be better codified and represented in AI models.

Third, key philosophical and religious texts that strongly influence specific cultural contexts should receive greater ‘weight’ in AI models.

Intercultural communication is a delicate and complex matter that requires nuanced and careful approaches. Failures in managing it can trigger conflicts and tensions. Thus, in the good old tradition of tech development, AI should at the very least do no harm. If it can also improve intercultural communication, it will contribute significantly to better understanding and peace at this critical time in human history.